Note: If you’ve arrived at this post looking for information about AI-powered test generation within Cypress, check out our recent webinar on the topic, which covers updates coming to Cypress Studio, and request access as part of a UI Coverage trial.

This is a guest post from Ambassador David Ingraham.

Hello, I’m David. I’m a Senior SDET with a passion for quality and for training engineers on industry best practices. I’ve been implementing robust testing frameworks with Cypress.io for three years, and I’m looking forward to many more. Find and connect with me at https://www.linkedin.com/in/dingraham01/!

The future is here!

As a new wave of AI chatbots enters the engineering space, there are plenty of opportunities to embrace these tools and combine them with Cypress. Writing tests, in particular, is a great way to leverage AI to improve development speed and deepen technical understanding. This blog breaks down how to begin using AI chatbots to write Cypress tests and provides some examples of prompt engineering for better AI results. We’ll also explore the shortcomings of AI chatbots. While impressive, they aren’t a complete substitute for manual programming; instead, they serve as a slick tool that can augment day-to-day workflows.

For those who want to follow along, the examples in this blog use the Cypress documentation and Phind, an AI search engine built on GPT-4.

A Simple Search Test

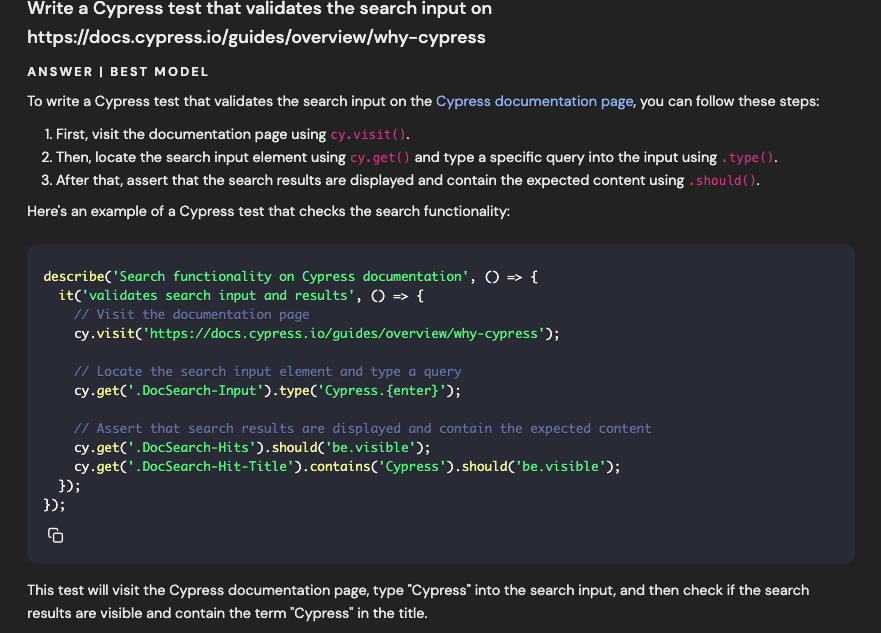

Let’s begin by prompting Phind with a high-level request to validate the search input on the Cypress documentation website. This prompt was run with both the “Use Best Model” and “Short Answer” toggles enabled.

Prompt:

Write a Cypress test that validates the search input on https://docs.cypress.io/guides/overview/why-cypress

The answer provided is quite impressive.

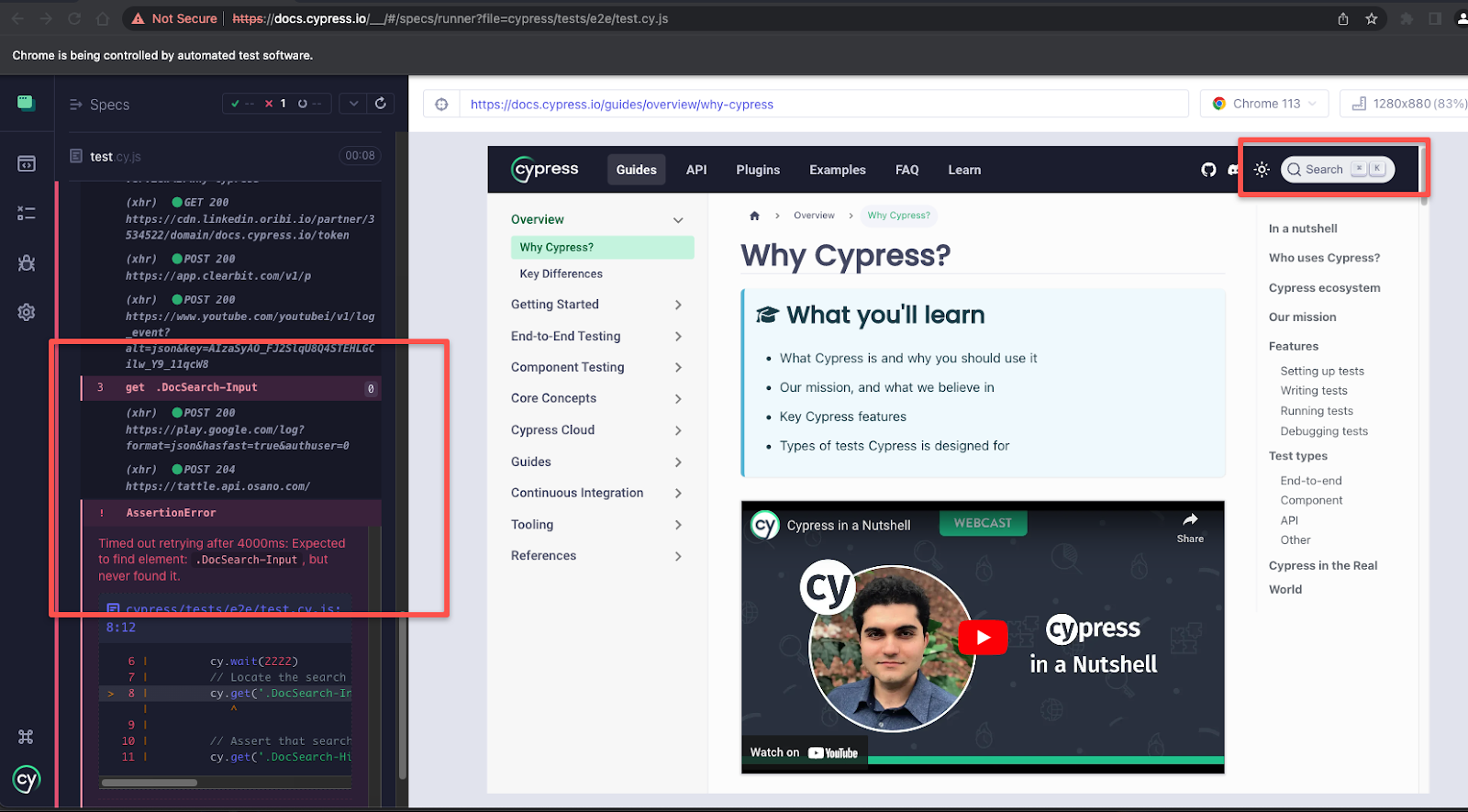

Phind not only returns a code snippet but also explains each step with in-line comments. This example appears fairly straightforward, but does the code actually work? Copying and running the provided code shows that the test fails on the search input selector Phind chose.

As we dig deeper into the code itself, it becomes evident that there are bigger issues than just the invalid selector.

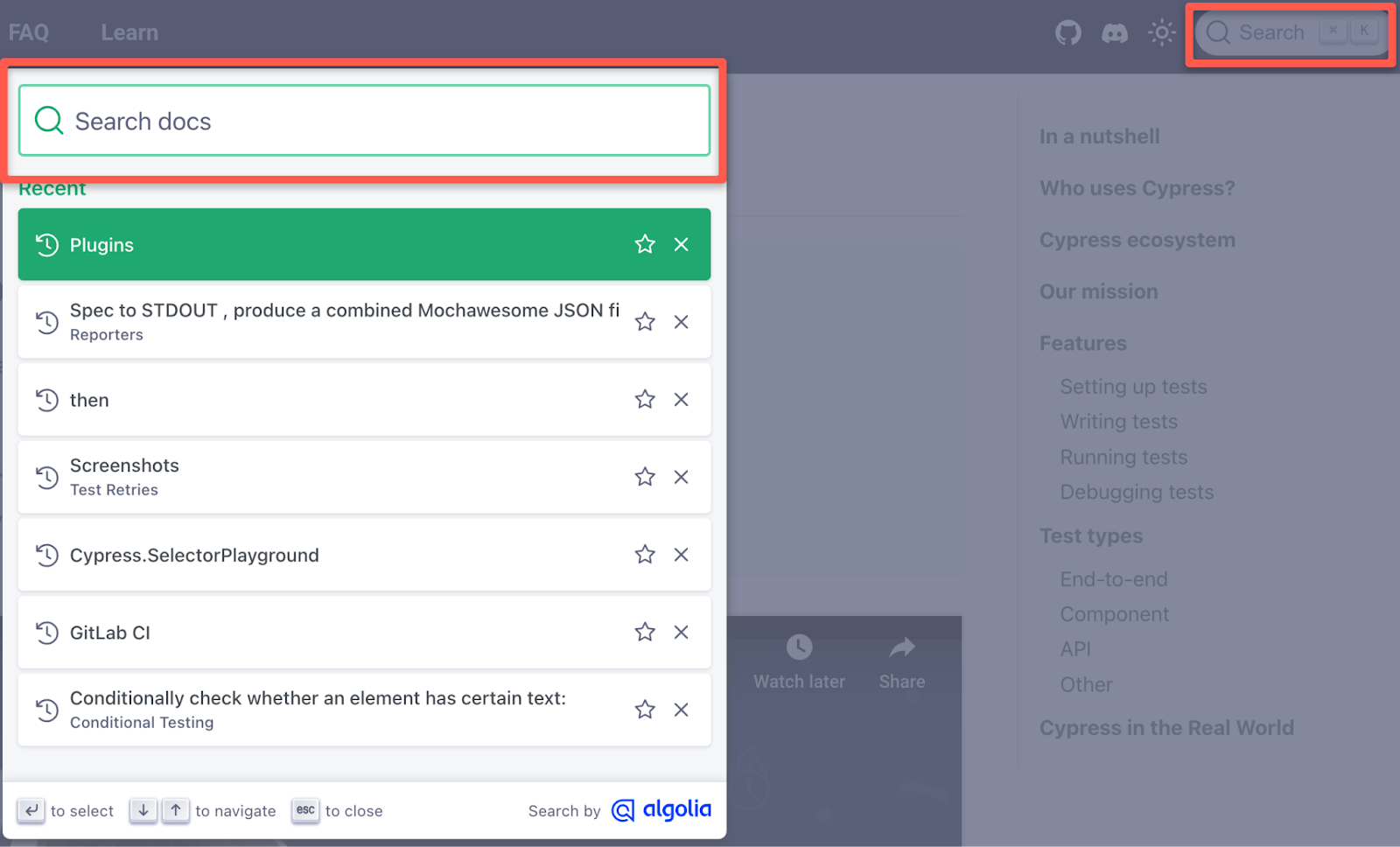

The Cypress documentation’s search input actually functions as a button that opens a separate search modal, which means our test needs to click it first and then type into a different element. In contrast, Phind’s test types into the search input directly, without the initial click.

The corrected implementation of Phind’s code would look something like this instead.

```javascript
describe('Cypress documentation search', () => {
  it('should be able to search for content', () => {
    // Visit the Cypress documentation website
    cy.visit('https://docs.cypress.io/guides/overview/why-cypress')

    // Explicit wait for page to load. Will be replaced using Phind later.
    cy.wait(2000)

    // Click the search input button
    cy.get('.DocSearch').click()

    // Type a search query into the input and submit the form
    cy.get('#docsearch-input').type('intercept{enter}').focus()

    // Ensure that the search results are displayed
    cy.get('.DocSearch-Hits').should('be.visible')
  })
})
```

While Phind was able to provide a high-level example of how to approach a search input test, it didn’t have all the specific implementation details needed for it to fully function.

Some tips we can use to improve the results are:

- Listing the exact steps a user would take for the test

- Being more specific and using direct and unambiguous commands

- Separating the instructions from the steps of the test by using “###” as a separator
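These tips are mechanical enough to template. As a minimal sketch, the hypothetical `buildPrompt` function below (not part of Cypress or Phind) joins an instruction, numbered steps, and optional context with “###” separators, producing text you could paste into a chatbot:

```javascript
/**
 * Hypothetical helper: builds a chatbot prompt whose sections are
 * separated with "###" headers, following the tips above.
 */
function buildPrompt({ instruction, steps, context }) {
  const sections = [
    '### Instruction ###',
    instruction.trim(),
    '### Steps ###',
    // Number each step so the chatbot (and you) can refer to them individually
    steps.map((step, i) => `${i + 1}. ${step.trim()}`).join('\n'),
  ];

  // Context (source HTML, existing tests, etc.) is optional
  if (context) {
    sections.push('### Context ###', context.trim());
  }

  // Blank lines between sections keep the separators unambiguous
  return sections.join('\n\n');
}

const prompt = buildPrompt({
  instruction:
    'Write a Cypress test that validates the search input on https://docs.cypress.io/guides/overview/why-cypress using valid class attributes as selectors.',
  steps: [
    'Visit the website',
    'Click on the search button',
    'Type "intercept" into the search input, hit enter, and focus on the search input',
    'Validate the search results are visible',
  ],
});

console.log(prompt);
```

Keeping prompts in a template like this makes it easier to reuse the same structure across tests; the section names are simply the convention used in this post, not a requirement of any particular chatbot.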

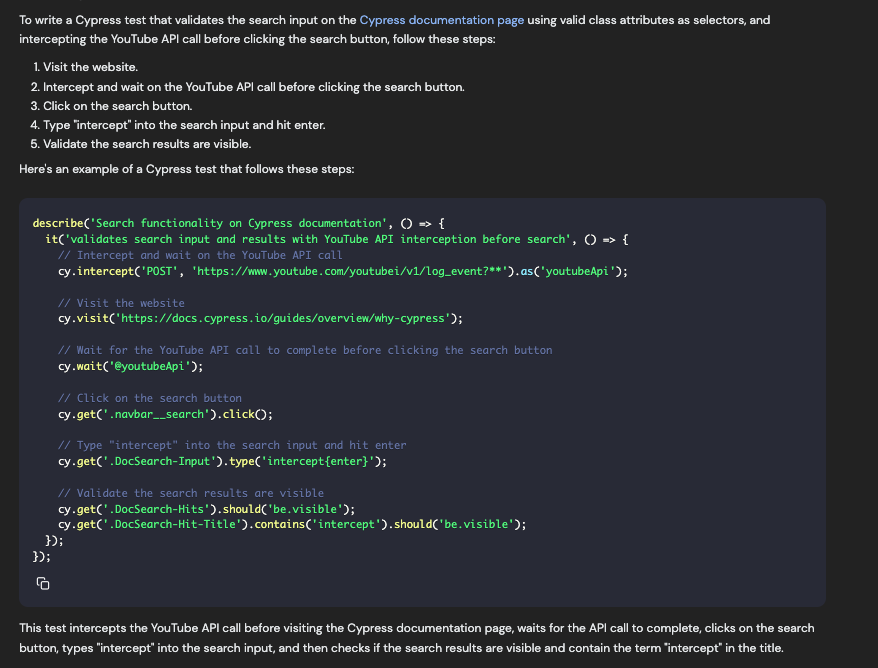

Let’s rewrite the initial prompt using these tips to explicitly step through each action without vagueness. We’ll also add a step to intercept and wait for one of the YouTube log event API calls to finish before attempting to search, which replaces the hard-coded `cy.wait(2000)` from the corrected test.

Prompt:

### Instruction ###

Write a Cypress test that validates the search input on https://docs.cypress.io/guides/overview/why-cypress using valid class attributes as selectors.

### Steps ###

1. Visit the website

2. Intercept and wait on the https://www.youtube.com/youtubei/v1/log_event?** POST API Call before clicking the search button

3. Click on the search button

4. Type "intercept" into the search input, hit enter, and focus on the search input

5. Validate the search results are visible

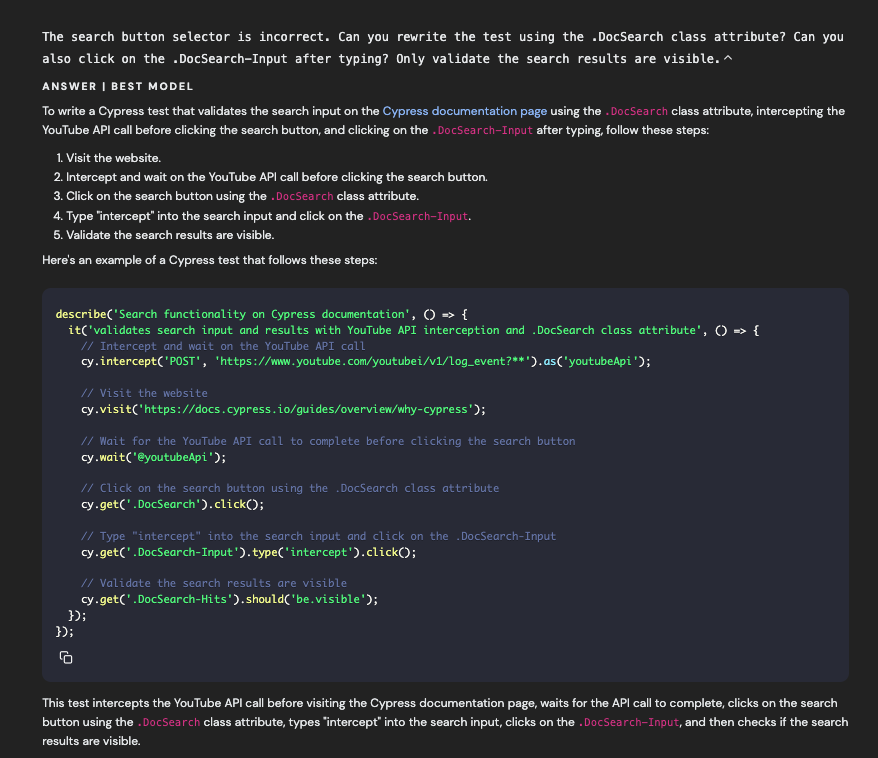

The result, once again, is in-depth. The selectors use class attributes, the YouTube endpoint is intercepted and awaited, and each requested step is labeled with an inline comment explaining what it does.

It’s closer to a working solution, but we still have two final issues:

- The search button selector is now incorrect

- The test needs to click out of the search input to trigger results to display.

Let’s follow up by explicitly asking Phind to refine its answer. This is not only acceptable but encouraged, since Phind keeps the context of previous questions. AI rarely provides a working solution on the first try, so it’s fine to bluntly ask it to correct parts of its answer.

Prompt:

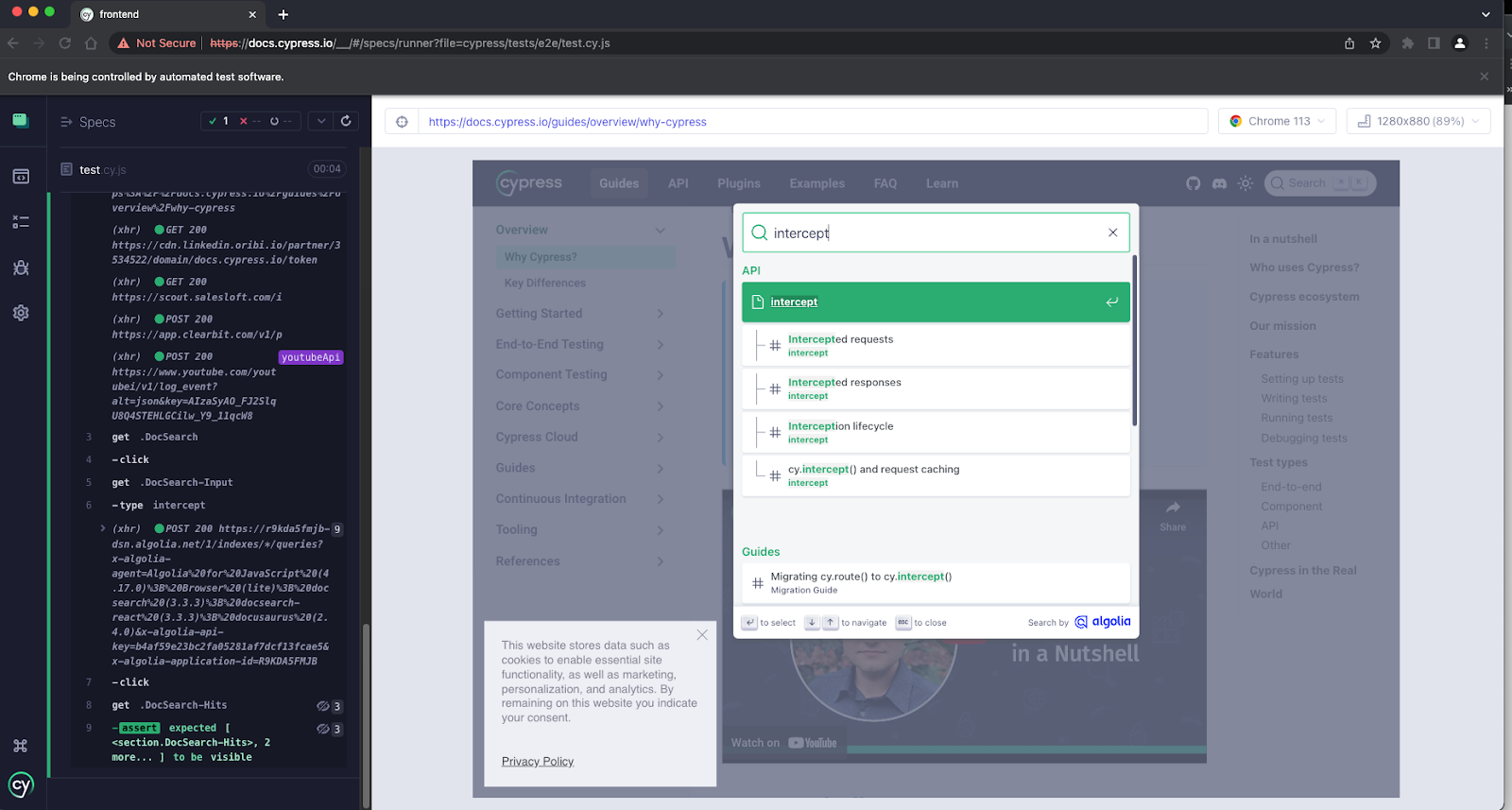

The search button selector is incorrect. Can you rewrite the test using the .DocSearch class attribute? Can you also click on the .DocSearch-Input after typing? Only validate the search results are visible.

Phind now returns valid selectors, and the test works as expected when copied and pasted. By refining the original prompt, we turned a small test case into working automation code. While this is a simple test, it shows that AI can provide an excellent foundation for tests.

Expanded Prompt Context

It’s important to remember that the more context and direction we give the AI in a prompt, the better our results will be.

Context can also come in many forms. In the example above, we explicitly listed our test case steps, but there are other ways to help the AI, such as providing source or test code.

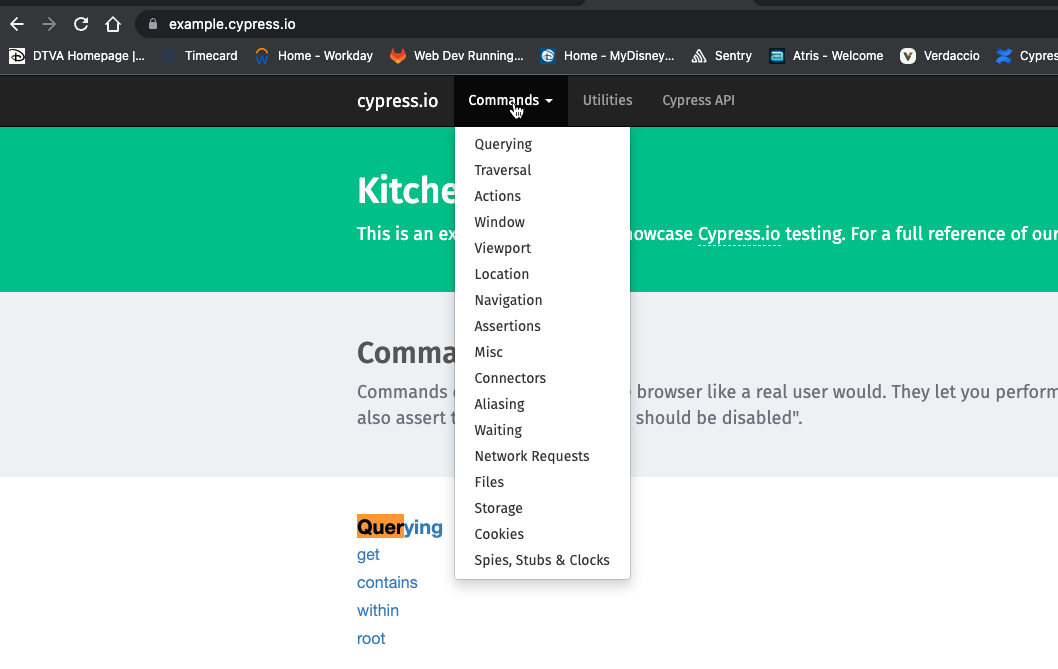

Let’s look at a slightly more complicated test case, which validates all the options in a dropdown list on the https://example.cypress.io/ webpage. Those who want to experiment can follow along with the cypress-example-kitchensink GitHub repo.

Using our tips from above, let’s ask Phind to write a test for this dropdown while providing it with some of the underlying HTML code. Although the prompt is a bit lengthy, notice how the sections are split up explicitly with direct language.

Prompt:

### Instruction ###

Write a Cypress test that validates that all the options within the Commands dropdown list are correct and have valid hrefs at https://example.cypress.io/.

### Steps ###

- Navigate to https://example.cypress.io/

- Click on the Commands dropdown

- Validate all dropdown list options

### Context ###

This is the HTML code for the dropdowns

```html
<li class="dropdown">
  <a href="#" class="dropdown-toggle" data-toggle="dropdown" role="button">Commands <span class="caret"></span></a>
  <ul class="dropdown-menu">
    <li><a href="/commands/querying">Querying</a></li>
    <li><a href="/commands/traversal">Traversal</a></li>
    <li><a href="/commands/actions">Actions</a></li>
    <li><a href="/commands/window">Window</a></li>
    <li><a href="/commands/viewport">Viewport</a></li>
    <li><a href="/commands/location">Location</a></li>
    <li><a href="/commands/navigation">Navigation</a></li>
    <li><a href="/commands/assertions">Assertions</a></li>
    <li><a href="/commands/misc">Misc</a></li>
    <li><a href="/commands/connectors">Connectors</a></li>
    <li><a href="/commands/aliasing">Aliasing</a></li>
    <li><a href="/commands/waiting">Waiting</a></li>
    <li><a href="/commands/network-requests">Network Requests</a></li>
    <li><a href="/commands/files">Files</a></li>
    <li><a href="/commands/storage">Storage</a></li>
    <li><a href="/commands/cookies">Cookies</a></li>
    <li><a href="/commands/spies-stubs-clocks">Spies, Stubs & Clocks</a></li>
  </ul>
</li>
```

![Phind's answer: a Cypress test that defines the expected dropdownOptions, clicks the Commands dropdown, and checks each list item's text and href](https://cypress-io.ghost.io/blog/content/images/2025/02/David-8-1.png)

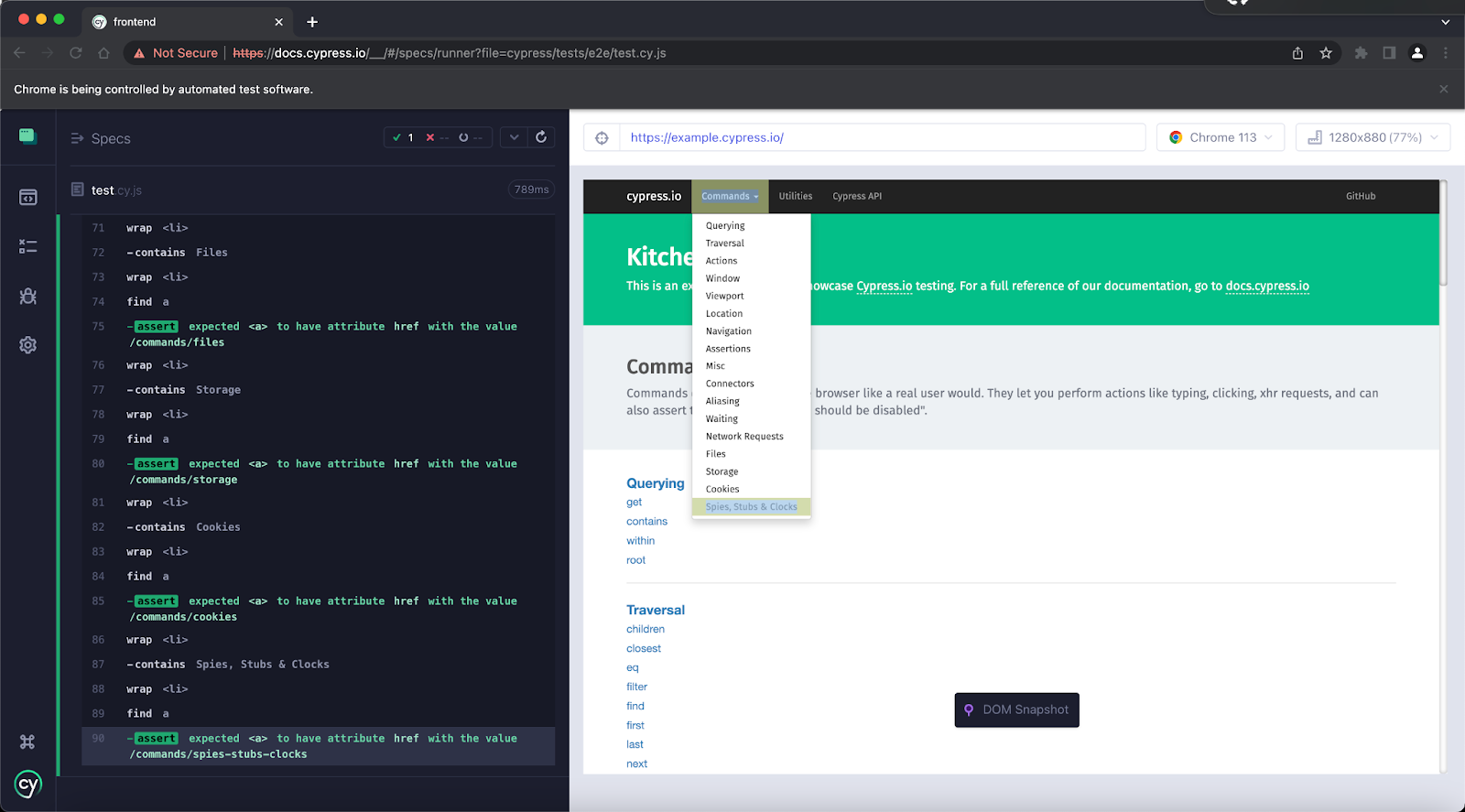

The answer is exactly what was requested: after we add the remaining options to the returned `dropdownOptions` array, the test passes.
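Rather than typing the remaining entries by hand, the `dropdownOptions` array could be generated from the same HTML pasted into the prompt. Below is a minimal sketch of a hypothetical `extractDropdownOptions` function; it assumes the markup is as simple and flat as the snippet above (each item written as `<li><a href="...">Label</a></li>`), so a regular expression is enough. It is not a general HTML parser.

```javascript
/**
 * Hypothetical helper: extracts { text, href } pairs from simple,
 * flat dropdown markup like the Commands snippet in the prompt.
 * Assumes each item is written as <li><a href="...">Label</a></li>.
 */
function extractDropdownOptions(html) {
  // The toggle link (<a href="#" class="dropdown-toggle" ...>) does not
  // match, because it is not wrapped directly in a bare <li>.
  const itemPattern = /<li><a href="([^"]+)">([^<]+)<\/a><\/li>/g;
  return [...html.matchAll(itemPattern)].map(([, href, text]) => ({
    text: text.trim(),
    href,
  }));
}

const commandsHtml = `
<ul class="dropdown-menu">
  <li><a href="/commands/querying">Querying</a></li>
  <li><a href="/commands/traversal">Traversal</a></li>
  <li><a href="/commands/spies-stubs-clocks">Spies, Stubs & Clocks</a></li>
</ul>`;

const dropdownOptions = extractDropdownOptions(commandsHtml);
console.log(dropdownOptions);
```

The resulting array could be pasted into the spec or saved as a fixture and loaded with `cy.fixture()`. If the real markup carries extra attributes or nesting, a proper DOM parser would be more reliable than this regex.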

It’s okay to write lengthy prompts as long as the instructions are clear and concise. The more complex a test scenario gets, the more context a prompt needs to produce accurate results. However, even with expanded context, AI won’t be able to provide runnable code for every situation. For beginners, AI is not a complete replacement for writing tests but an incredible tool that can speed up writing them. For experienced engineers, manually writing tests may still be faster than massaging prompts and correcting AI responses until they return a working solution.

Other Examples

Now that we’ve seen AI write simple Cypress tests, the same approach can be applied to many other areas, including:

- Writing tests with different patterns and frameworks, like the Page Object Model (POM) or Cucumber

- API tests

- Component tests

- Unit tests

- Cypress Custom Commands

Shortcomings

Throughout this blog, the test cases and examples have been simple and straightforward. In reality, however, web applications are complicated, and test cases can become lengthy and layered. Front-end frameworks like React and Angular abstract the underlying HTML, which makes it hard to copy and paste meaningful markup into an AI prompt. Additionally, applications that are local-only or hidden behind layers of authentication make it impossible to provide a URL that the AI can reference.

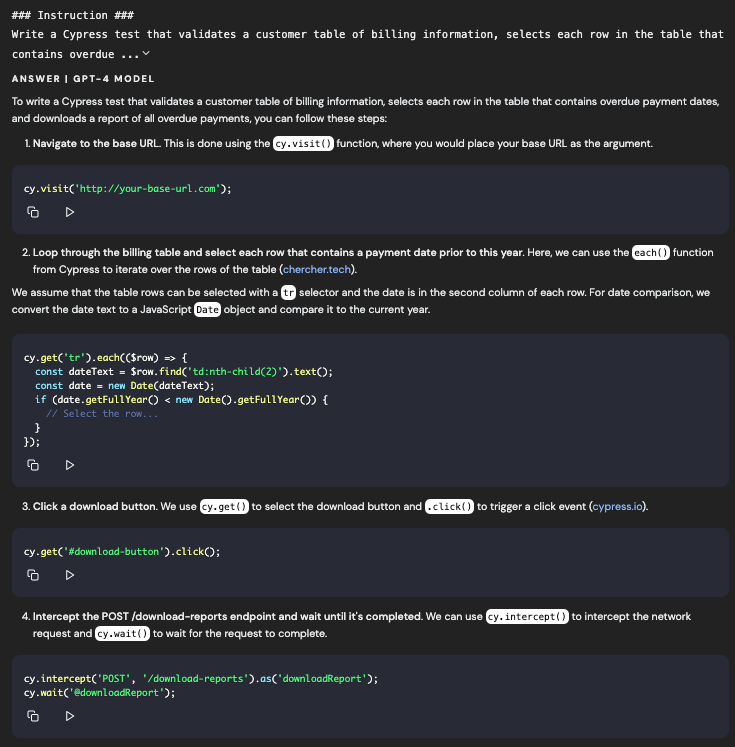

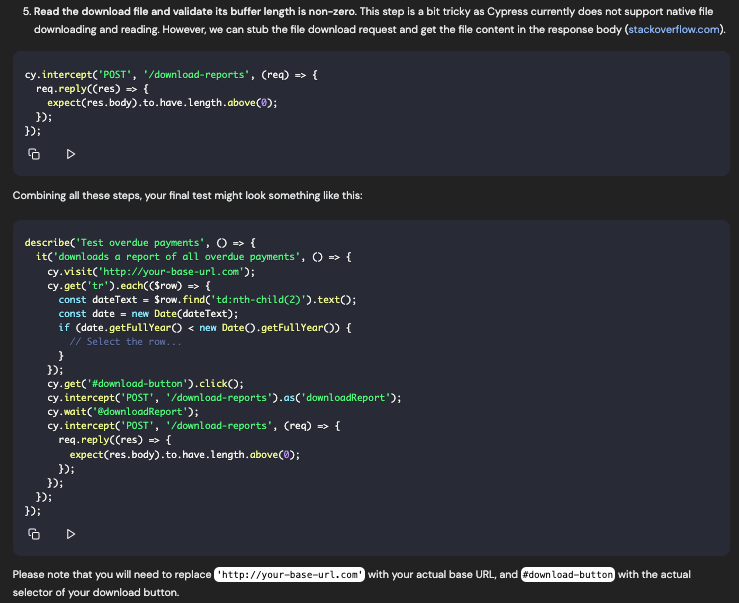

Let’s pretend we are developing a private React application for a bank that tracks customers’ billing information and flags individuals with overdue payments in a table. Let’s ask Phind to write a Cypress test case that validates this fake billing table by selecting each overdue row and clicking a download button to create a report containing all overdue payments.

Prompt:

### Instruction ###

Write a Cypress test that validates a customer table of billing information, selects each row in the table that contains overdue payment dates and pushes a download button which downloads a report of all overdue payments.

### Steps ###

- Navigate to the base url

- Loop through the billing table and select each row that contains a payment date prior to this year

- Click a download button

- Intercept the POST /download-reports endpoint and wait until it's completed

- Read the download file and validate its buffer length is non-zero

Even though we listed the exact steps of our use case, the AI is limited in what it can generate without concrete technical details such as the table’s markup or selectors. As seen above, Phind returned code for our use case, but it’s very generic and even acknowledges that the user will need to replace multiple lines. Additionally, the intercept code it provided is invalid: Phind doesn’t account for the fact that `cy.intercept()` must be registered before clicking the download button; otherwise, the request can fire before Cypress is listening for it. The code is filled with holes, but it does a fantastic job of outlining what each step might look like and suggesting which Cypress commands to use along the way.

For most applications and realistic use cases, AI won’t be able to provide explicit, exact solutions, but it can guide users on how to approach writing different test cases. The code above should be used as a framework for the test case rather than trusted as the final solution.
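One hole a generic answer like this leaves is the logic itself: which rows count as overdue, and what “non-zero buffer length” means for the downloaded report. A minimal sketch, assuming a hypothetical row shape of `{ customer, paymentDate }` with ISO `YYYY-MM-DD` dates (the real table’s structure is unknown here), keeps those rules in plain functions that can be checked on their own. Because `fs` only exists in Node, not in the browser where specs run, a function like `isNonEmptyFile` would typically be registered with `on('task', ...)` inside `setupNodeEvents` and called via `cy.task()`; `cy.readFile()` is another way to read the download directly from a spec.

```javascript
const fs = require('fs');

/**
 * Hypothetical helper: true when an ISO "YYYY-MM-DD" payment date falls
 * in a year before the current one ("prior to this year" in the prompt).
 * `now` can be passed in so the rule is testable with a fixed date.
 */
function isOverdue(paymentDate, now = new Date()) {
  const match = /^(\d{4})-\d{2}-\d{2}$/.exec(paymentDate);
  if (!match) {
    throw new Error(`Unexpected date format: ${paymentDate}`);
  }
  return Number(match[1]) < now.getFullYear();
}

// Keeps only the rows whose payment date is overdue.
// Assumes the hypothetical row shape { customer, paymentDate }.
function selectOverdueRows(rows, now = new Date()) {
  return rows.filter((row) => isOverdue(row.paymentDate, now));
}

// Hypothetical Node-side check for the "buffer length is non-zero" step:
// true only when the downloaded report exists and is not empty.
function isNonEmptyFile(filePath) {
  return fs.existsSync(filePath) && fs.statSync(filePath).size > 0;
}

// Made-up example rows for illustration only.
const rows = [
  { customer: 'Customer A', paymentDate: '2021-06-15' },
  { customer: 'Customer B', paymentDate: `${new Date().getFullYear()}-01-10` },
];

console.log(selectOverdueRows(rows).map((row) => row.customer));
```

Injecting `now` instead of reading the clock inside `isOverdue` keeps the rule deterministic, so the same check gives the same answer regardless of when the suite runs.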

AI search engines may fall short when test cases become complex or abstracted. In those cases, coding assistants integrated into the editor, such as GitHub Copilot, can be more effective, since they offer in-line suggestions based on the surrounding code and existing patterns.

AI chatbots are best used as guides rather than as replacements for manual coding. Although the examples above demonstrate that they can provide working code, their responses can be finicky and may require multiple rounds of prompting. That being said, chatbot AI is a great resource for approaching different test cases and producing draft code that speeds up development. AI is here to stay, and Phind serves as a fantastic gateway into a world of endless possibilities for improving one’s relationship with Cypress. The next time you write a test, give AI a chance.

Happy Testing!

Note: As of the completion of this blog, Phind has replaced its Shortest Answer toggle with a new Pair Programmer toggle, which actively asks the user for specifics about their prompt in a fluid back-and-forth conversation.